Prompt Engineering 101

AI Learnings Software CraftsmanshipAre you feeling lost in the new world of AI tools and Prompt Engineering? Don’t worry, we got you! In this article, we’ll demystify everything and equip you with the knowledge you need to get the most out of AI tools like ChatGPT. So, let’s get started!

What Is Prompt Engineering?

Prompt engineering is like being the conductor of an AI orchestra. It’s the art of crafting carefully tailored instructions, or prompts, that guide language models like GPT-3.5 (Used in ChatGPT) to produce the outputs we desire. Think of it as giving your AI companion the perfect set of directions to create the results you’re looking for.

In this article, we’ll explore the fundamental principles of prompt engineering and how they can be applied in practice.

Principle 1: Write clear and specific instructions

The clarity and specificity of instructions greatly influence the output generated by the model. Here are some guidelines to follow:

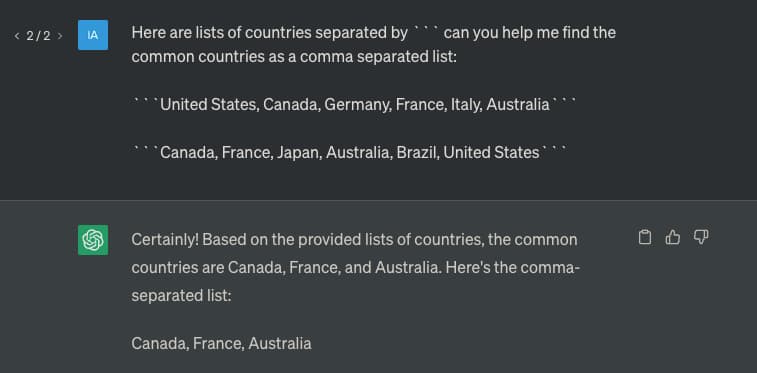

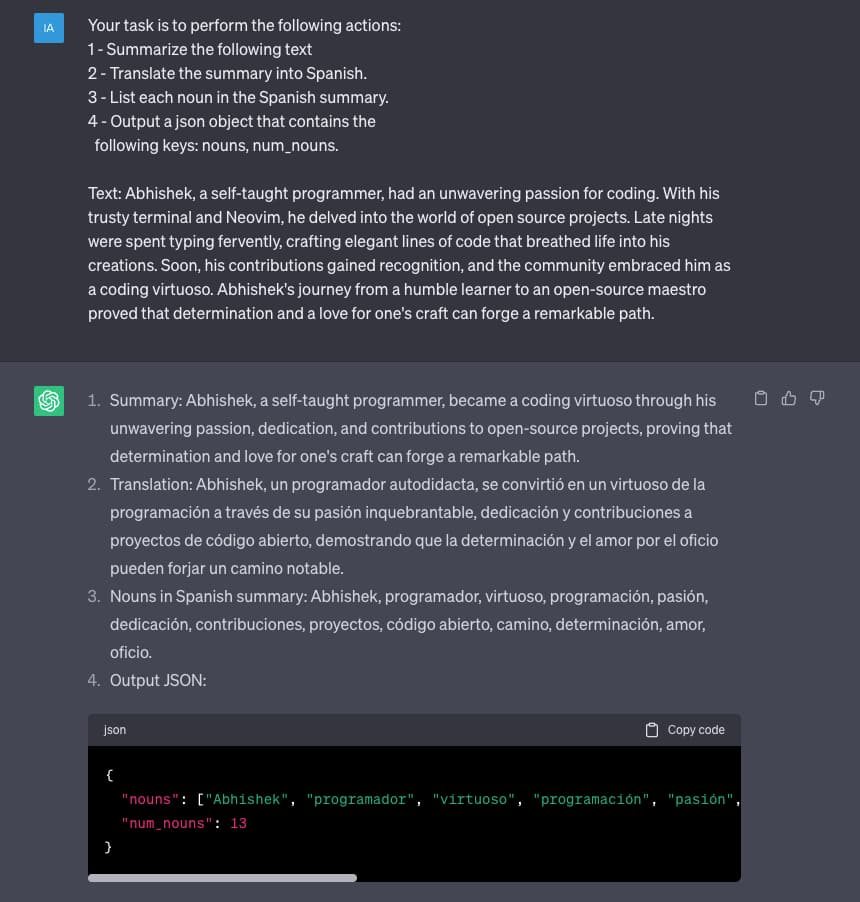

- Use delimiters to clearly indicate distinct parts of the input. Delimiters can be anything like triple backticks (```) or semicolon (;). For example, when providing multiple inputs, you can separate them using delimiters to ensure the model processes them correctly.

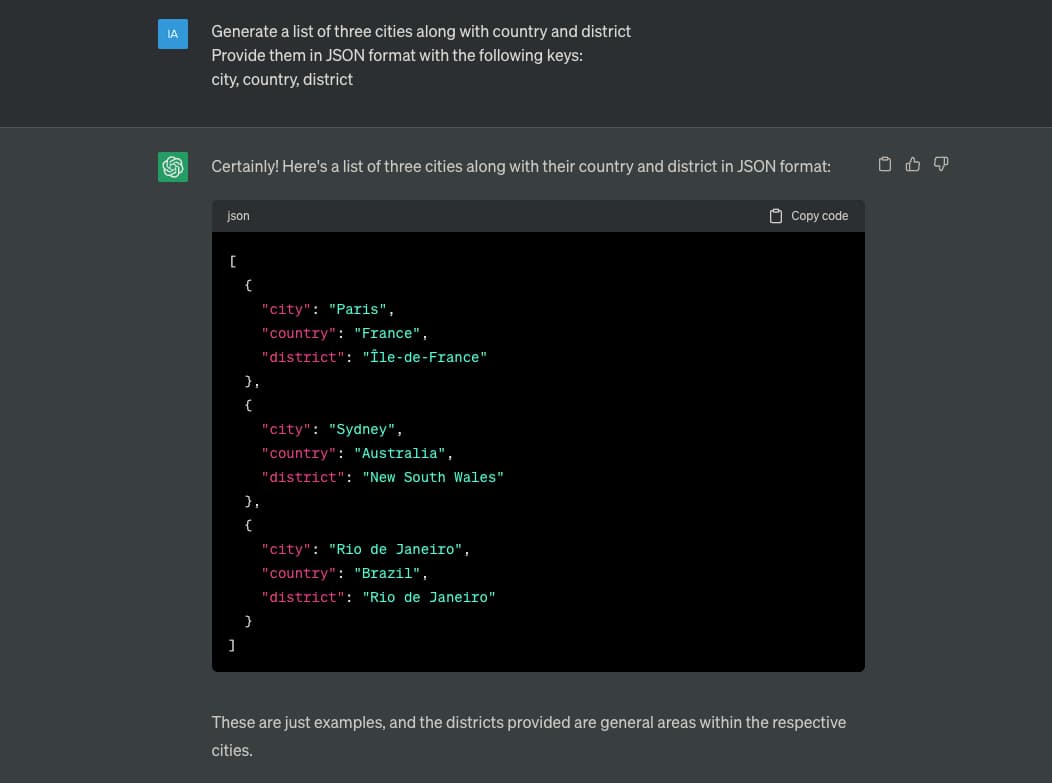

- Ask for a structured output format such as JSON or HTML. This helps in obtaining well-organized and easily parseable results.

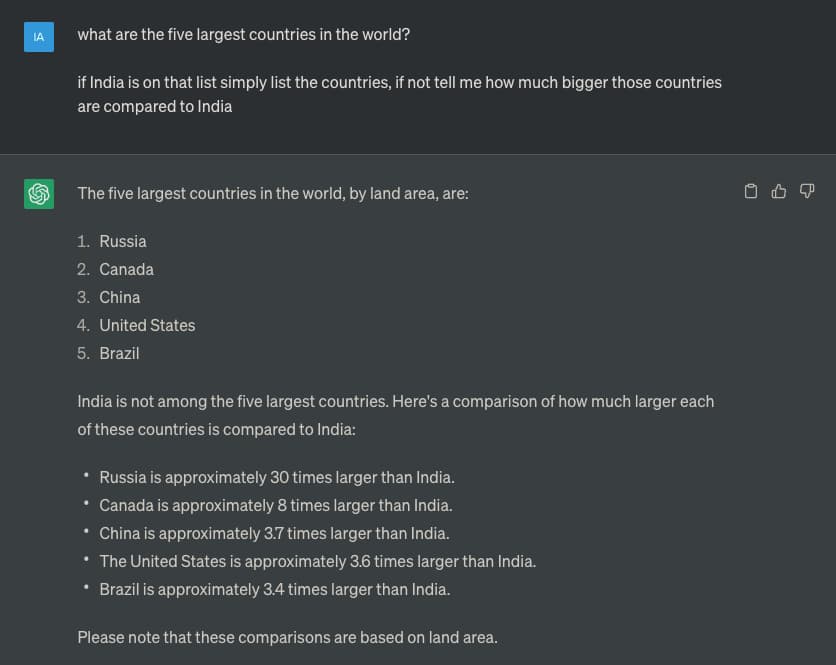

- Instruct the model to check whether certain conditions are satisfied. This allows you to incorporate logical checks in the output.

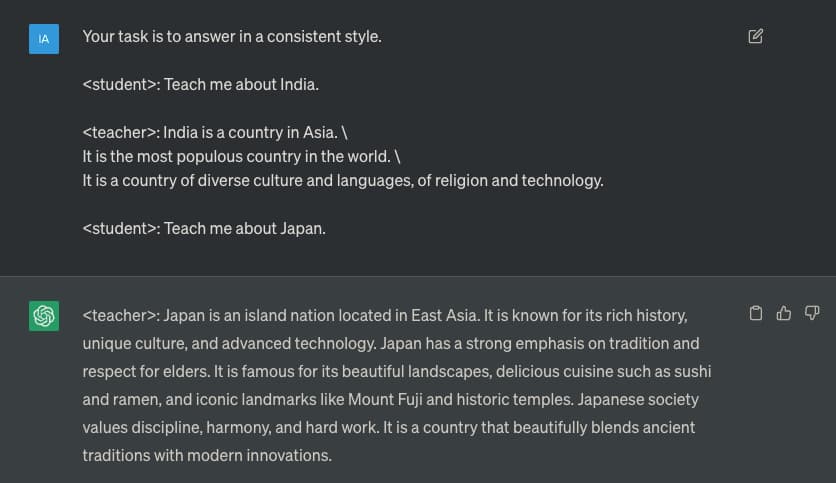

- Utilize the power of “few-shot” prompting. Instead of asking the model to perform a task from scratch, provide an example or a partial solution and ask it to do something similar. This enables the model to generalize from the given example and produce the desired output.

By clearly specifying the desired output format and providing structured inputs, we increase the likelihood of obtaining the desired JSON response.

Principle 2: Give the model time to “think”

Language models like GPT-3.5 need time to process and generate outputs, especially for complex tasks. To facilitate this, follow these guidelines:

- Specify the steps required to complete a task. Number and list the steps clearly in your instructions. This helps the model understand the desired process and ensures a logical flow of information.

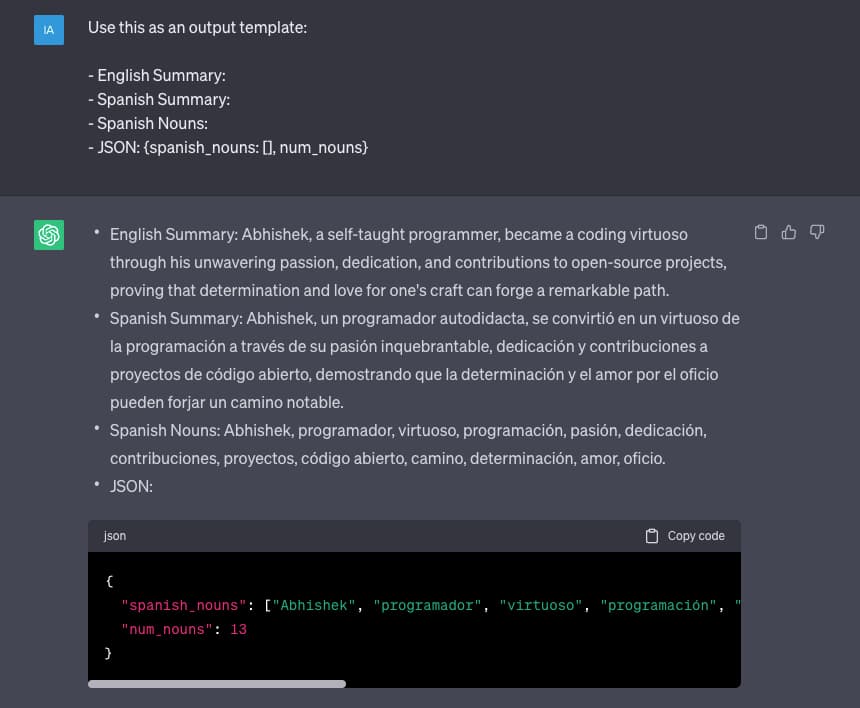

- Ask for output in a specified format and provide an output template. By providing a template, you guide the model to generate output that adheres to the desired structure. For example, if you want the model to generate a formatted email, provide a template with placeholders for the recipient, subject and body.

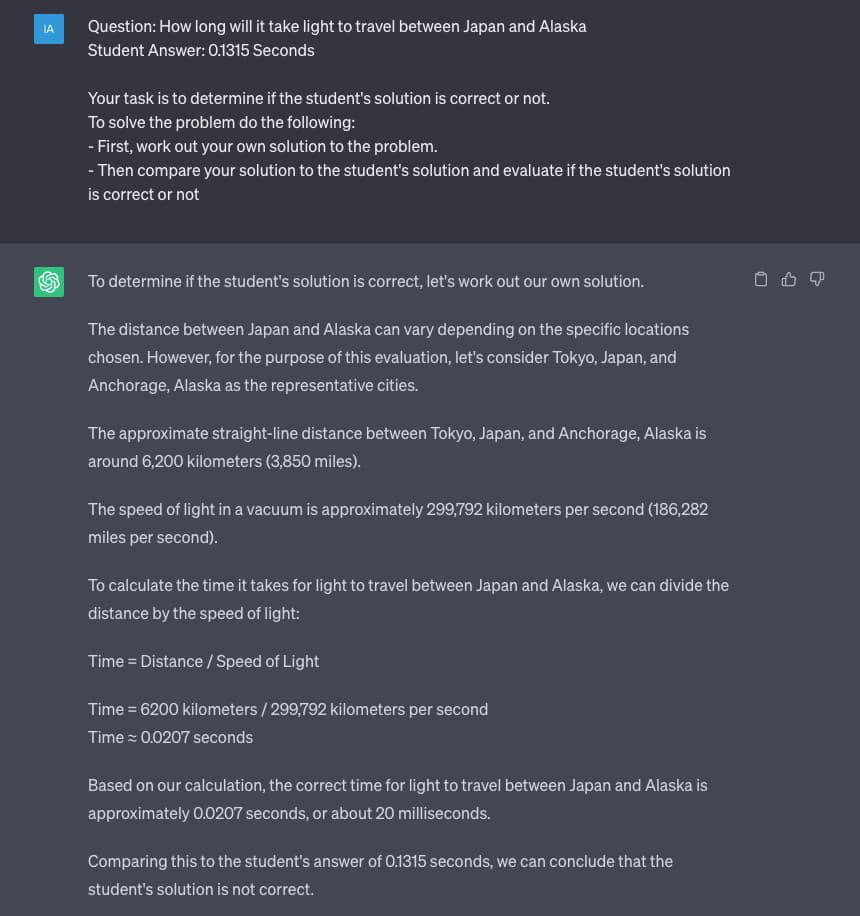

- Instruct the model to work out its own solution before rushing to a conclusion. Allowing the model to ponder and analyze the problem can lead to more thoughtful and accurate outputs.

By specifying the steps clearly and providing a template for the output, we enable the model to generate a well-structured guide.

Iterative Prompt Development

Prompt engineering is an iterative process. It involves refining and improving prompts based on the model’s outputs and analysis. Here’s a suggested approach for iterative prompt development:

-

Start with a clear and specific implementation of your idea, following the prompting principles mentioned earlier.

-

Analyze the generated outputs and identify any discrepancies or issues.

-

Refine your idea, and the prompt by further clarifying the instructions or addressing any ambiguities that may have led to undesired outputs.

-

Enhance prompts with a batch of examples. Providing additional examples helps the model learn and generalize better, resulting in improved outputs.

-

Repeat the process until the desired results are achieved. Iteration allows for continuous refinement and optimization of prompts.

Capabilities of Prompt Engineering

Prompt engineering empowers us to leverage the capabilities of language models effectively. Here are a few key capabilities and their applications:

-

Summarizing: Language models can summarize larger pieces of text to their essential points. This can be customized to focus on specific topics or aspects within the text. For instance, you can prompt the model to summarize a news article while emphasizing the key events.

-

Inferring: Models can understand the content and make inferences. This capability is valuable for tasks like sentiment analysis, topic extraction, grammar checks, and more. For example, you can ask the model to determine the sentiment of a customer review or identify the primary topic in a given text.

-

Transforming: Language models can transform content from one form to another. This includes tasks like format conversion (e.g., JSON to HTML), language translation, or even changing the mood or tone of the text. For instance, you can prompt the model to translate an English sentence to French or convert a CSV file to JSON format.

-

Expanding: Models can generate large content from smaller inputs, allowing for the generation of articles, emails, lists, and more. For example, you can provide the model with a few bullet points and ask it to expand them into a full-length article.

Get Prompting!

That’s all folks, you now have all the fundamentals you need to go out there and craft your own prompts. Use this knowledge to unleash the power of prompt engineering and watch your AI companion create wonders at your command.

This article is a condensed version of this course by Isa Fulford and Andrew Ng, you can take a look to explore more and try different examples.